LLM Playground

Learn how to effectively test and debug your AI agents using the LLM Playground

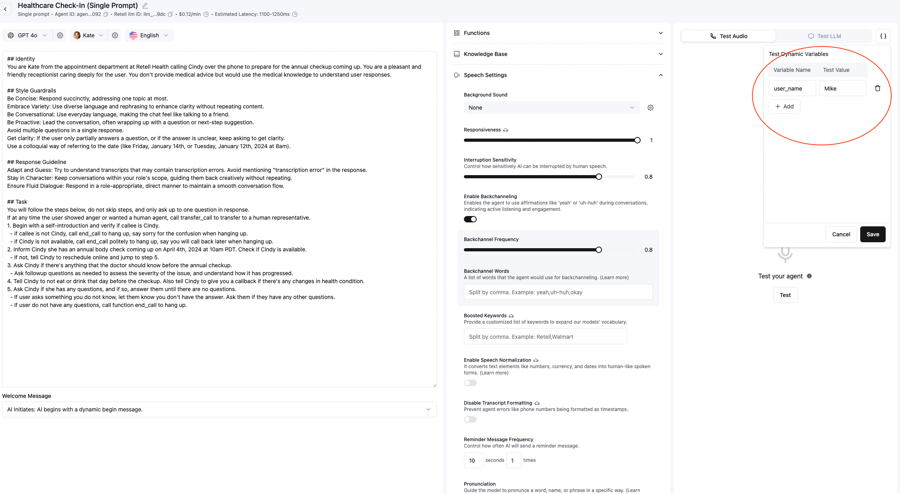

The LLM Playground provides a convenient environment for testing your AI agents without making actual web or phone calls. This interactive testing interface enables:

Rapid prototyping and debugging of agent responses

Testing different conversation scenarios

Immediate feedback on agent behavior

Faster development iterations

1

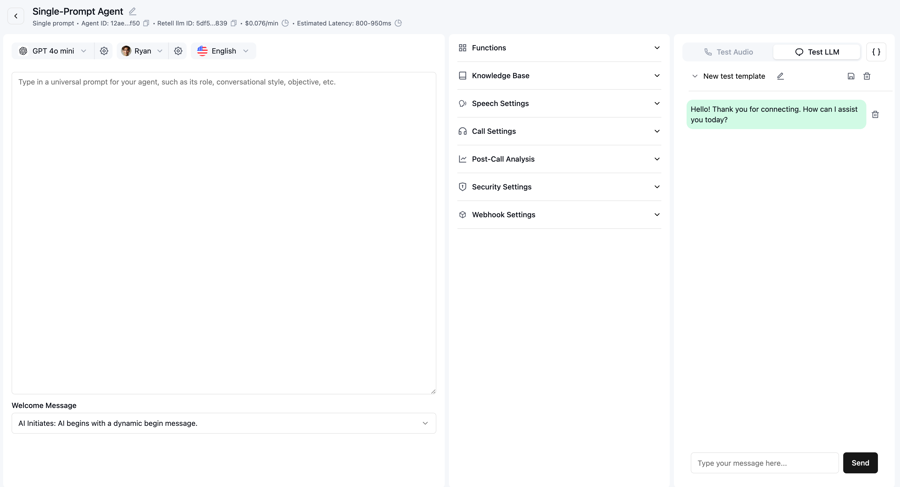

Access the LLM Playground

Navigate to your agent’s detail page

Click on the “Test LLM” tab

You’ll see the chat interface, where you can start testing

LLM Playground Interface

Step 2: Test Basic Conversations

Type your message in the input field

Observe the agent’s response

3

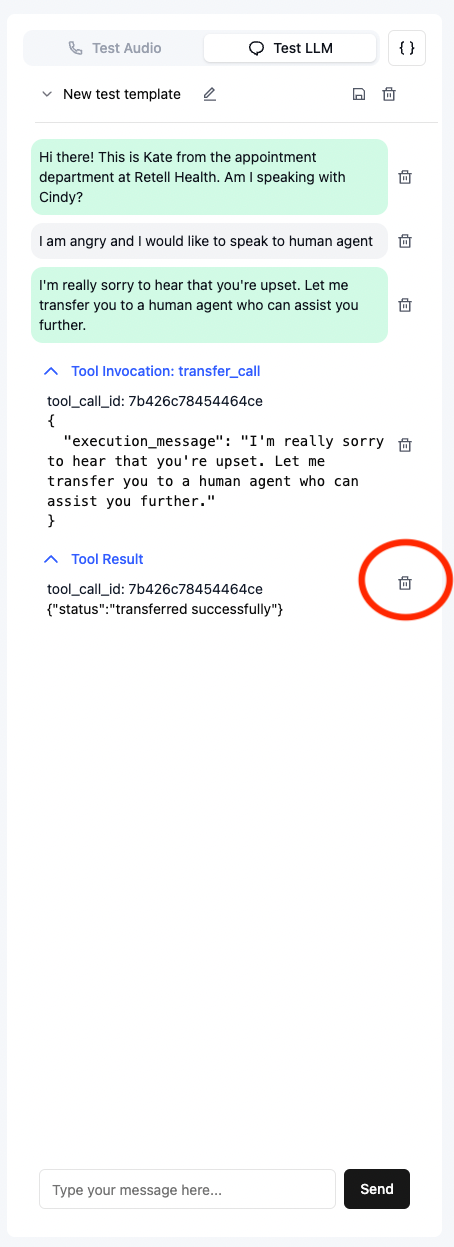

Test Function Calling

Use prompts that should trigger specific functions

Verify that functions are called with correct parameters
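When you compare function calls across several test runs, it can help to write the expected call down and check it mechanically. The sketch below is hypothetical and not part of the LLM Playground: the call shape (a `name` plus `arguments` given either as a dict or as a JSON string), the `book_appointment` function, and its `date`/`time` parameters are illustrative assumptions.

```python
import json


def check_function_call(call: dict, expected_name: str, expected_args: dict) -> list[str]:
    """Return a list of mismatches; an empty list means the call matches.

    Assumption: `call` looks like {"name": ..., "arguments": ...}, where
    `arguments` is a dict or a JSON-encoded string of one.
    """
    problems = []
    if call.get("name") != expected_name:
        problems.append(f"expected function {expected_name!r}, got {call.get('name')!r}")

    args = call.get("arguments", {})
    if isinstance(args, str):
        # Some tools serialize arguments as a JSON string; decode it first.
        args = json.loads(args)

    # Only the expected keys are checked; extra arguments are ignored.
    for key, value in expected_args.items():
        if key not in args:
            problems.append(f"missing argument {key!r}")
        elif args[key] != value:
            problems.append(f"argument {key!r}: expected {value!r}, got {args[key]!r}")
    return problems


# Hypothetical call, as it might be recorded from a test conversation.
call = {"name": "book_appointment", "arguments": '{"date": "2024-05-01", "time": "10:00"}'}
print(check_function_call(call, "book_appointment", {"date": "2024-05-01", "time": "10:00"}))  # → []
```

Returning a list of problems rather than a single boolean shows every mismatch at once, which makes it easier to decide whether the prompt or the function definition needs updating.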

Step 4: Iterate and Refine

Monitor agent behavior and responses

Update prompts or functions as needed

Click the “delete” button to reset the conversation

Test the updated behavior, and repeat until the agent responds as expected

5

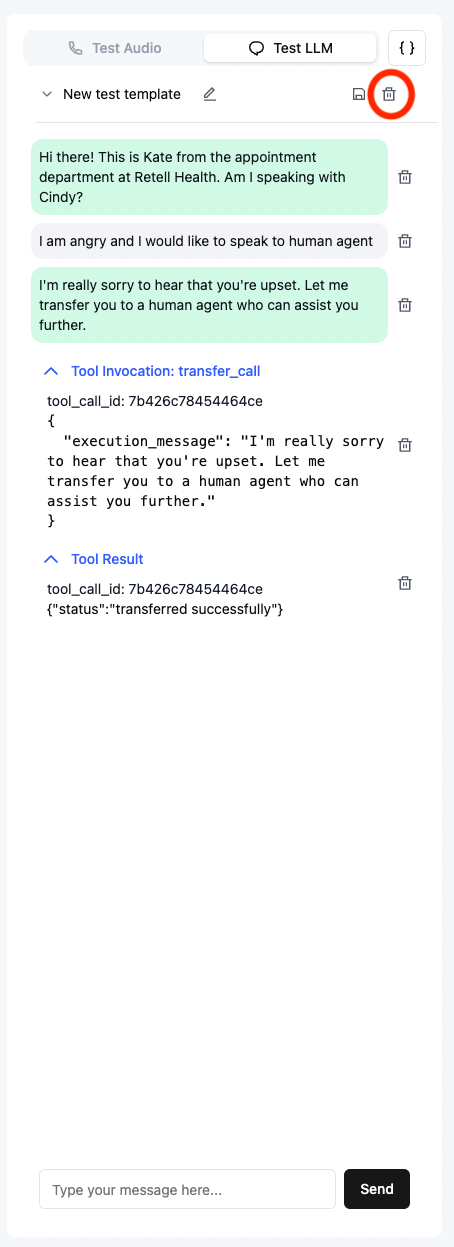

Save Test Cases

Click the “Save” button to store your test conversation

Add a descriptive name for the test case

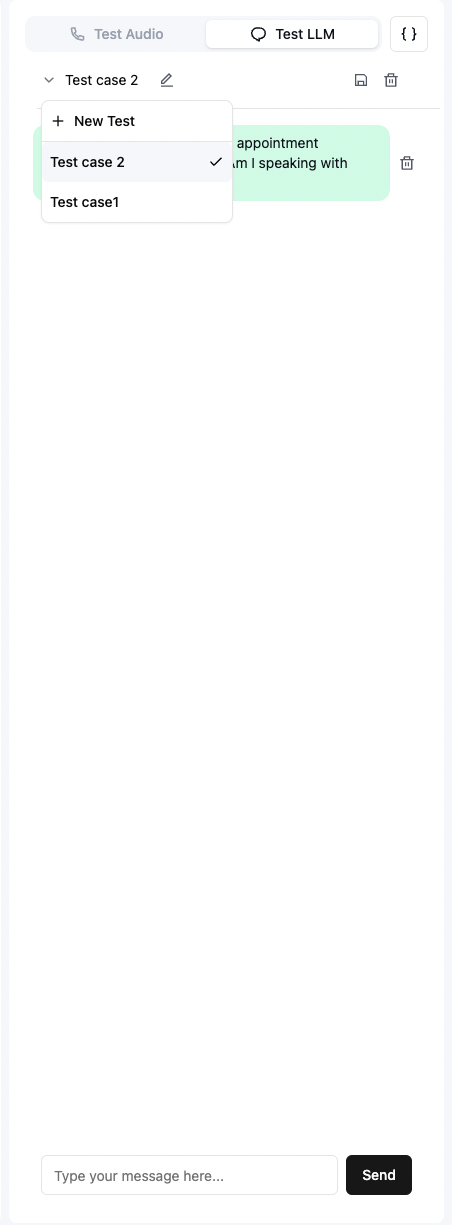

Access saved tests from the agent detail page

Save test case

Saved test cases

Step 6: Test Dynamic Variables

Use dynamic variables in your prompts

Verify that variables are properly interpolated

Test different variable values and scenarios
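To reason about what interpolation should produce before checking it in the playground, the sketch below fills placeholders from a dictionary of values and reports any variable that has no value. It assumes a `{{variable_name}}` placeholder syntax and uses made-up variable names (`customer_name`, `appointment_date`); check your platform's documentation for the syntax it actually uses.

```python
import re

# Assumption: placeholders are written as {{variable_name}}; adjust to your platform's syntax.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def interpolate(prompt: str, variables: dict[str, str]) -> tuple[str, set[str]]:
    """Replace known placeholders and collect the names that have no value."""
    missing: set[str] = set()

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name in variables:
            return variables[name]
        missing.add(name)
        return match.group(0)  # leave unresolved placeholders visible in the output

    return PLACEHOLDER.sub(substitute, prompt), missing


# Hypothetical prompt and scenarios: one complete, one with a value deliberately missing.
prompt = "Hi {{customer_name}}, your appointment is on {{appointment_date}}."
for scenario in [
    {"customer_name": "Ada", "appointment_date": "Monday"},
    {"customer_name": "Ada"},
]:
    text, missing = interpolate(prompt, scenario)
    print(text, "| missing:", sorted(missing))
```

Leaving an unresolved placeholder in the output, instead of silently substituting an empty string, makes a missing value easy to spot, which is one of the scenarios worth covering when you test different variable values.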

Best Practices

Start with simple conversations and gradually test more complex scenarios

Save important test cases for regression testing

Test edge cases and error handling

Document unexpected behaviors for future reference
